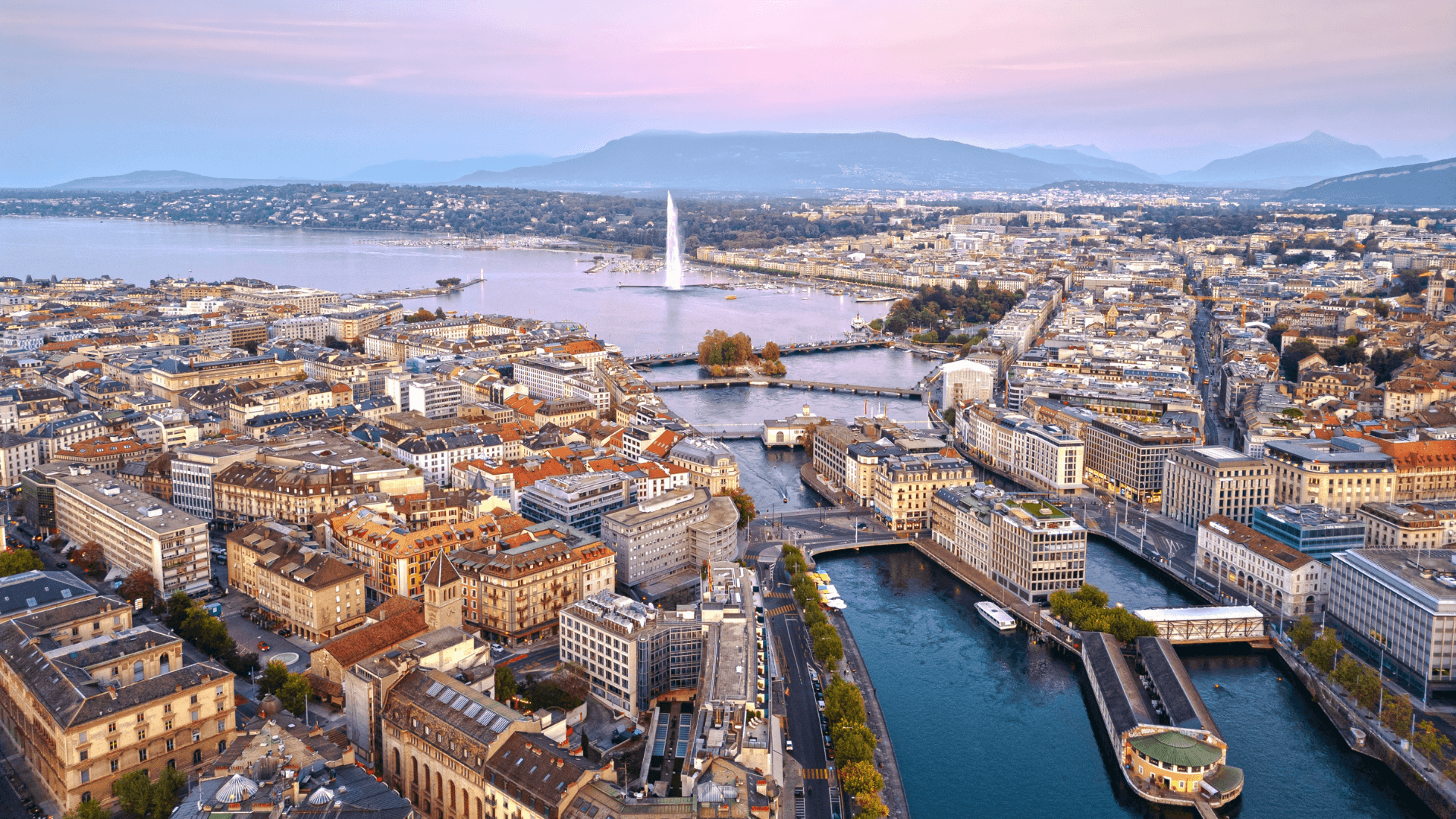

Geneva is not cheap, but it is a city that radiates sophistication and beauty and, with a huge United Nations presence, is one of the most diverse cities on the planet. When the sun is shining and the city’s lake glitters like burnished silver, it feels like a place where humanity is at its zenith, and its moniker as the ‘capital for peace’ feels more than appropriate.

Geneva is also home to any number of NGOs that have a huge influence on our lives, including the International Electrotechnical Commission (IEC), formed in 1906 and still, literally, setting standards for Europe and beyond.

At a moment when trust in digital content is rapidly eroding, the global standards community marshalled by the IEC has never been so important, especially in the new world where AI is upending everything that came before it.

Standards and regulations

Standards and regulations are both important for establishing and maintaining order, but they differ in their legal standing. Regulations are legally binding rules enforced by authorities, while standards are voluntary guidelines that serve as benchmarks for quality and performance.

Standards can be incorporated into regulations, making them legally binding, or they can be adopted voluntarily to enhance quality and interoperability.

This month’s launch of the AI and Multimedia Authenticity Standards Collaboration (AMAS), a multistakeholder initiative led by the World Standards Cooperation (WSC), brought with it two pivotal papers designed to help navigate the ethical and technical challenges of synthetic media and artificial intelligence.

With generative AI transforming everything from art to audio to political discourse, the initiative arrives with bold ambitions: to lay the global groundwork for standards that can counter misinformation, verify the authenticity of multimedia and help societies distinguish real from fake in an increasingly AI-driven world.

Two roadmaps for a more trustworthy digital future

The first paper, a technical roadmap, provides a comprehensive overview of the global landscape of standards and specifications related to digital media authenticity. It surveys what currently exists, identifies gaps and recommends a forward-looking framework to develop standards that can be adopted across industries.

The second paper, a policy guidance document, is aimed at regulators and lawmakers. It details how international standards can serve as the foundation for governance frameworks that balance innovation with risk mitigation in the age of generative AI. From watermarking AI-generated images to ensuring traceability in video provenance, the paper underscores how standardized approaches can strengthen transparency and accountability.

The Stakeholders Behind the Effort

AMAS is spearheaded by the World Standards Cooperation, a triad comprising the aforementioned IEC, the International Organization for Standardization (ISO) and the International Telecommunication Union (ITU), the last of which also serves as the UN’s specialised agency for information and communication technologies.

With no shortage of acronyms on show, a coalition of other stakeholders is also involved, including the Coalition for Content Provenance and Authenticity (C2PA), the JPEG Group, EPFL, Shutterstock, Fraunhofer Heinrich Hertz Institute, China Academy of Information and Communications Technology (CAICT), DataTrails, Deep Media and Witness, among others.

Their shared mission is clear: to create interoperable, international standards that can offer a shared language for digital authenticity, one that governments, companies and civil society can rally behind.

Why Standards Matter in the AI Era

Gilles Thonet, Deputy Secretary-General of the IEC, said: “International standards provide guardrails for the responsible, safe and trustworthy development of AI. These white papers lay the foundation for systems that prioritize transparency and human rights by mapping existing standards and identifying the gaps we urgently need to fill.”

With AI-generated deepfakes already influencing elections, celebrity culture and global conflicts, the stakes are high. Thonet and his colleagues view standards not as bureaucratic hurdles, but as enablers, providing trusted tools for governments and industry alike to ensure AI serves humanity rather than undermines it.

Silvio Dulinsky, Deputy Secretary-General of ISO, emphasized the need for actionable solutions that transcend borders: “People need practical, scalable solutions to prevent and respond to challenges caused by synthetic media. These papers offer that, using standards as a vehicle for global interoperability.”

Defining the Path to Seoul 2025

The release of these documents also sets the stage for a major upcoming event: the International AI Standards Summit, which will take place in Seoul on 2–3 December 2025. This summit aims to accelerate progress on global AI standards by bringing together regulators, technologists and civil society leaders under one roof.

That conference will likely build upon the groundwork laid this week in Geneva, where the conversation moved from abstract ethics to tangible implementation.

As synthetic content grows more sophisticated and cheaper to produce, the demand for reliable frameworks is no longer just academic. Whether it is confirming that a viral video is real or ensuring that AI-generated news articles are labeled as such, standardisation may become the linchpin of digital trust.

Interoperability as a Cornerstone

One of the key challenges the AMAS papers tackle is interoperability: ensuring that content provenance technologies, authenticity markers, and verification systems can work across platforms and geographies.

This is especially important for social media networks, news organizations, and governments tasked with moderating content at scale. Without common standards, efforts to identify and manage AI-generated content will remain fragmented—and therefore less effective.

Importantly, the papers stress the need to develop tools that are technically robust yet user-friendly, so that authenticity signals can be detected by both machines and humans. This dual-layer approach is seen as vital for democratizing access to trustworthy information.

Balancing Innovation and Responsibility

While some critics of AI regulation fear a chilling effect on innovation, AMAS positions international standards as a balancing mechanism—enabling both creative freedom and ethical oversight.

Rather than pushing for a one-size-fits-all global law, the collaboration’s policy paper envisions a standards-based ecosystem that governments can adopt and adapt, in accordance with local legal frameworks and cultural norms.

At its core, the initiative is a call for shared responsibility—recognizing that no single entity, be it a tech giant or national government, can address the challenge of AI-generated misinformation alone.

A Defining Moment for AI Governance

The Geneva launch marks a turning point in how the international community is preparing to address the darker dimensions of artificial intelligence. As AI-generated media floods our screens, from realistic fake videos to persuasive AI-written political statements, the tools to detect and manage such content must evolve just as quickly.

By grounding that evolution in international standards, AMAS hopes to prevent a future in which society can no longer trust what it sees, hears, or reads online. For those concerned with the future of digital integrity, the message is clear: the time to build that future is now.
