Most people face long waiting times for psychiatric care (sometimes years in the UK for assessments and specific psychiatric care), so it's critical that clinicians spend their time doing what matters most: attending to their patients.

Aisel has developed a purpose-built AI co-pilot for psychiatrists, which aims to cut wait times by streamlining psychiatric intake and documentation.

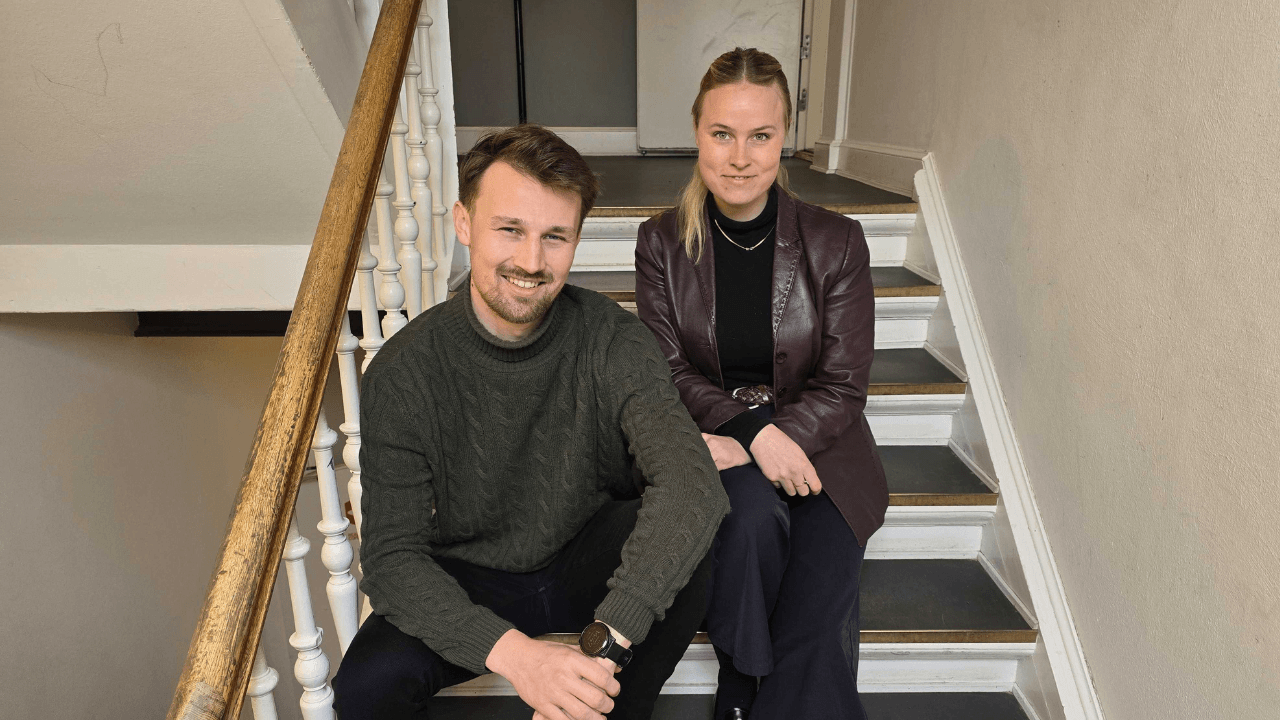

I spoke to Augusta Klingsten Peytz, co-founder of Aisel, to find out more.

Klingsten Peytz is based in Denmark. She studied at Copenhagen Business School and at INSEAD, where she spent time in France and Singapore.

After graduating, she started her career in consulting, but six months in, she had a concussion that left her sick for six months, both from the injury and from the mental health challenges that come with being bedridden.

She recalled:

“When I recovered, I took a step back and asked myself what would make me happy.”

She joined a venture firm that only invested in mental health solutions, which she described as “an amazing way to return to entrepreneurship, which I’ve loved since I was young. From there, I joined an accelerator, and I called up an old colleague and asked if he’d be willing to quit his job and start a company with me - and he did!”

The result is Aisel, an end-to-end clinical documentation suite designed to streamline psychiatric workflows and enhance patient care.

Why build AI for psychiatry clinicians, not general healthcare?

There are a lot of general health intake AI tools. Why build something specific to psychiatry? According to Klingsten Peytz, psychiatry is completely different from somatic health.

“In physical health, you can run tests and scans. In mental health, it’s all subjective. There’s very little you can physically measure.

“You rely on the clinician’s judgment, which means you need to spend a lot of time with the patient. But the system doesn’t have the resources. That’s where we come in: to support, not replace.

“Also, in psychiatry, two people can have the same diagnosis, like depression, but need totally different treatments depending on their background, circumstances, and history. So time spent understanding them is critical.”

Alicia: Aisel’s AI assessor

Aisel’s AI-powered assessor, Alicia, engages patients in secure, structured conversations before and after consultations, collecting intake data, medication histories, and standardised assessments via written or verbal input, saving up to 35 minutes per new patient.

First, an on-screen AI avatar conducts a pre-consultation conversation with the patient. It's not an AI chatbot, but strictly a one-way means of gathering audio and, if the patient opts in, video input.

According to Klingsten Peytz, the interview format is built on existing psychiatric best practices, including the Present State Examination (PSE-9) and ICD-10, and is tailored for specific clinics. For example, if a clinic focuses on PTSD, the interview delves deeper into childhood history.

The intake interview encompasses four sections:

- Why the patient is there and how they were referred.
- Recent symptoms.
- General background: family history, trauma, childhood.
- Current habits: alcohol, drug use, sleep, etc.

Empowering patients through transparency

When generating the patient summary, how much nuance do you want extrapolated?

Aisel lets patients see their intake summary first, in a format that they can edit for accuracy.

Klingsten Peytz shared:

“It started as a quality-control feature, but patients have said it’s empowering. They get transparency and agency — and that builds trust.”

In-consultation and post-session documentation

During sessions, Aisel In-Consultation enables real-time transcription and note generation, allowing clinicians to stay focused on patients while ensuring accurate, clinic-standard documentation.

After each session, Aisel Dictation lets clinicians record voice summaries, which are automatically transcribed and formatted into structured clinical notes, including medication records and therapy summaries. Aisel estimates that this cuts the time spent on each note by 40 to 60 per cent.

Together, these tools, designed in collaboration with practising psychiatrists and medical advisors, reduce cognitive load, improve documentation quality, and save valuable time across the care pathway.

Prioritising purpose over hype

I was curious whether the AI analysis tracks and interprets vocal cues such as tone of voice, breathing, sighs, and pauses.

Klingsten Peytz admits, “At first, I was really excited about that — affective computing, vocal markers, etc. But right now, we’re not doing it.”

“The reason is simple: it’s a nice-to-have, but not essential. The patient will still see a clinician who brings that human intuition. So far, our customers haven’t found those features to be compelling enough to implement.”

This underscores the importance for startups of focusing on the technology’s core purpose — in this case, improving clinical efficiency — rather than getting distracted by flashy, non-essential features.

Private first, public later

So far, Aisel works primarily with private clinic groups. Klingsten Peytz admits, “our primary focus is the private sector because we’re not yet mature enough as a company to handle the bureaucracy required to work with large, complex public systems.”

“We have a handful of customers, and the feedback has been encouraging. Clinicians like the reports and say, ‘I could have written this myself.’”

But building trust in AI takes time. Aisel is also undertaking a large-scale pilot project with a public hospital to study the impact of its tech and tailor a version of the product to their specific needs. Furthermore, it's worth noting that the software targets private clients, where patients are typically non-acute and have a more moderate presentation.

Aisel intentionally avoids use cases involving acute psychiatric episodes.

According to Klingsten Peytz, “If someone is in a manic or psychotic state, they should be in the emergency department, not talking to an avatar.”

Privacy by design

For Aisel, patient privacy is paramount. Every feature is built on privacy-by-design principles, and one of the company’s first major investments was in compliance with regulations such as the EU AI Act.

Its database separates patient names from their data using encrypted identifiers. It also has automatic screening to redact identifiable information.
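To make that idea concrete, here is a minimal Python sketch of the general pattern, not Aisel's actual implementation. It assumes a keyed hash (HMAC-SHA256) as a stand-in for the "encrypted identifiers" described above, and two simple regular expressions as a stand-in for the automatic screening. Every name in it (`SECRET_KEY`, `pseudonym`, `redact`, `save_note`, and the two in-memory stores) is hypothetical.

```python
import hashlib
import hmac
import re

# Hypothetical secret; a real system would load this from a key vault.
SECRET_KEY = b"example-key-not-for-production"

# Deliberately simple patterns, for illustration only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def pseudonym(patient_name: str) -> str:
    """Derive a stable, opaque identifier from a name using a keyed hash."""
    digest = hmac.new(SECRET_KEY, patient_name.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


# Two separate stores: one maps identifiers to names, the other holds notes.
identity_store: dict[str, str] = {}
clinical_store: dict[str, list[str]] = {}


def save_note(patient_name: str, note: str) -> str:
    """Store a redacted note under the patient's pseudonymous identifier."""
    pid = pseudonym(patient_name)
    identity_store[pid] = patient_name
    clinical_store.setdefault(pid, []).append(redact(note))
    return pid
```

In a setup like this, someone with access only to the clinical store sees opaque identifiers and redacted text. Linking a note back to a person requires the separate identity store, which can be held under tighter access controls.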

This is particularly relevant as legal scrutiny of AI companies grows, and startups using AI for mental health services like chatbots and avatars may face unintended privacy challenges.

For example, a recent US court order requires OpenAI to indefinitely preserve all user conversations from ChatGPT’s Free, Plus, Pro, Team, and standard API services — including those users have deleted — due to ongoing copyright litigation with The New York Times.

For a startup using OpenAI to train mental health models and relying on standard API endpoints, this means that any sensitive user data processed through OpenAI may be retained far longer than expected and cannot be deleted. That could expose users’ private information to legal scrutiny and create significant privacy and compliance risks, especially under regulations like HIPAA or GDPR.

However, the court order explicitly exempts API customers using Zero Data Retention (ZDR) endpoints, which are designed so that data is never stored or logged by OpenAI and is technically impossible to preserve.

Built with clinical partners, not public datasets

Aisel uses LLMs for the intake avatar and summarisation, but Klingsten Peytz explained that “the important part is how we structure and prompt the questions.

“That’s machine learning, and it’s based on psychiatric protocols and constant feedback from our customers.”

Aisel doesn’t use external datasets, in part because doing so carries a lot of risks in mental health: “For example, around 47 per cent of psychiatric diagnoses change after 10 years. We are also seeing a lot of diagnoses overlooked, for example with ADHD. So many high-functioning women were missed because they didn’t present like the stereotypical ‘disruptive’ kid in school. We’re learning so much, so late.

“So if you train on that data, you’re just reinforcing flaws. Instead, we build everything with and for our clinical partners.”

Aisel is now focused on deepening its work with 10 to 20 partner clinics, aiming to understand their workflows in detail so that it is ready to scale in the next phase. It is also staying on top of regulations like the AI Act and GDPR, as well as the MDR now that the NHS has given more clarity there, to make sure it is compliant at every step.

Klingsten Peytz asserts, “In healthcare, you can’t just ‘move fast and break things.’ We’re taking a ‘build fast, but carefully’ approach.”

Lead image: Christian R. Houen and Augusta Klingsten Peytz, co-founders of Aisel. Photo: uncredited.
